- gjenghks@naver.com

- Incheon, South Korea

- Joint Research

This research addresses multi-source light detection (LD) in nighttime driving environments, focusing on light sources such as vehicle lights, traffic signals, and streetlights. Unlike traditional binary light detection tasks, this work detects each light source and assigns it to its own class.

To support this, the authors introduce a novel dataset named YouTube Driving Light Detection (YDLD), consisting of 3,516 images and 116,028 bounding box annotations for three classes: car lights, traffic lights, and streetlights. These images were collected from real driving videos under diverse night and evening conditions.
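As a quick check on annotation density, the dataset counts above work out to an average of exactly 33 boxes per image, which suggests that each frame typically contains many light sources:

$$
\frac{116{,}028\ \text{boxes}}{3{,}516\ \text{images}} = 33\ \text{boxes per image}
$$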

Fig 1. Red: Streetlight / Green: Traffic Signal / Blue: Car light (Source: Paper Figure 1)

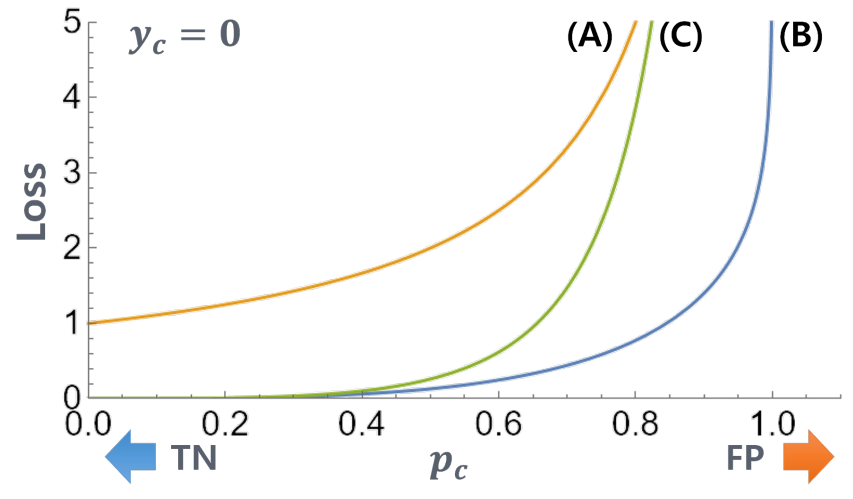

Because light sources are often extremely small and visually similar to one another, detection performance is typically poor. To address this, the paper proposes several new methods:

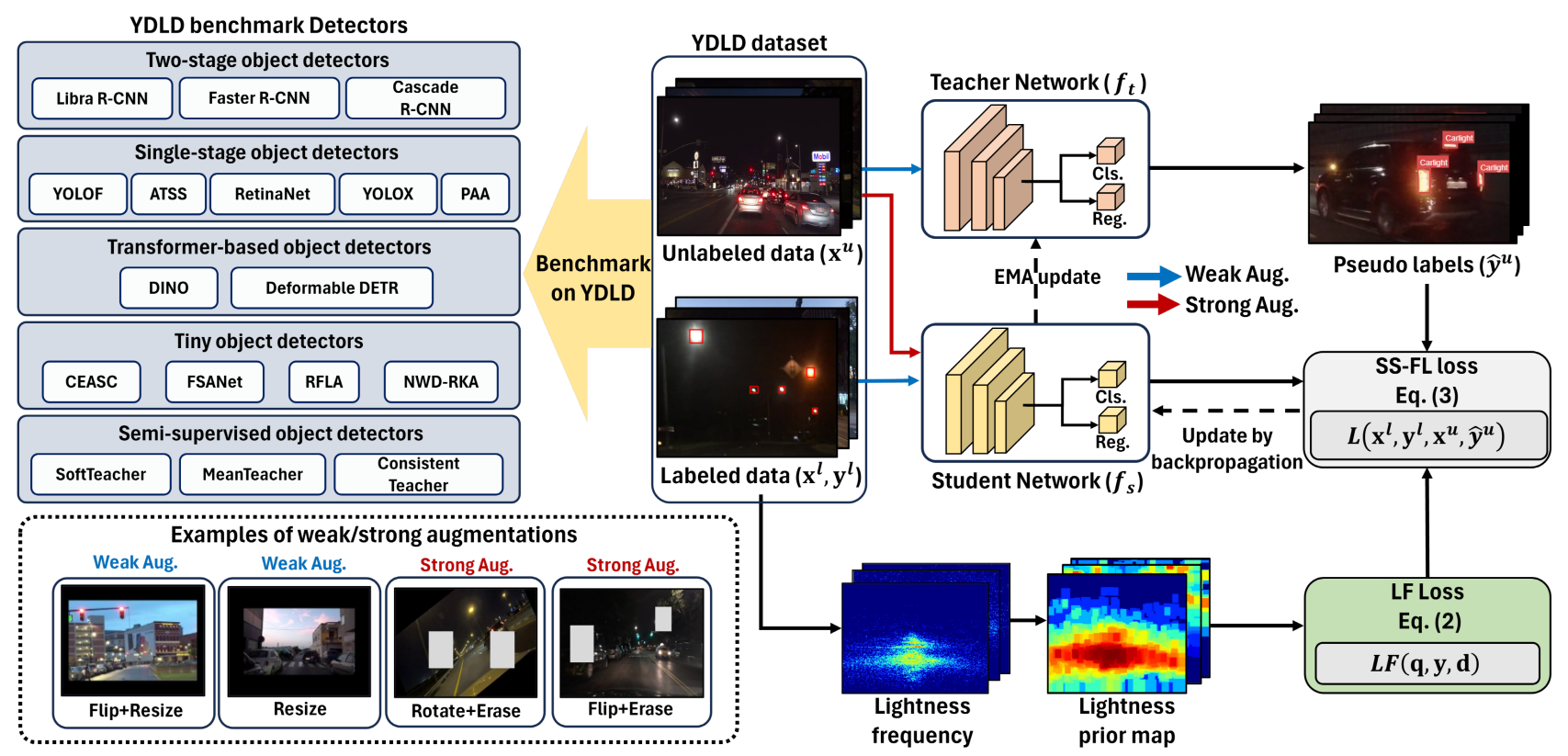

The proposed architecture integrates Lightness Focal Loss and Spatial Attention Prior into a semi-supervised object detection (SSOD) framework. It mainly consists of the following three modules:

Fig 2. SS-FLD Architecture: Integrating LF Loss and Attention Prior into a Semi-Supervised Detection Pipeline (Source: Paper Figure 3)
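This summary does not describe SS-FLD's training loop. For orientation only, here is a minimal sketch of a common teacher-student SSOD pattern. It is an assumption and is not confirmed for SS-FLD. In this pattern, the teacher's weights are an exponential moving average (EMA) of the student's, and only confident teacher detections on unlabeled images are kept as pseudo-labels. The names `Detection`, `ema_update` and `filter_pseudo_labels` are hypothetical. The same applies to the 0.999 decay and the 0.7 score threshold.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Detection:
    box: Tuple[float, float, float, float]  # (x1, y1, x2, y2) in pixels
    label: str  # hypothetical class names: "car_light", "traffic_light", "streetlight"
    score: float  # teacher confidence in [0, 1]


def ema_update(teacher: Dict[str, List[float]],
               student: Dict[str, List[float]],
               decay: float = 0.999) -> None:
    """Move each teacher weight toward the matching student weight (in place)."""
    for name, student_vals in student.items():
        teacher_vals = teacher[name]
        for i, s in enumerate(student_vals):
            teacher_vals[i] = decay * teacher_vals[i] + (1.0 - decay) * s


def filter_pseudo_labels(detections: List[Detection],
                         score_threshold: float = 0.7) -> List[Detection]:
    """Keep only confident teacher detections to use as pseudo-labels."""
    return [d for d in detections if d.score >= score_threshold]


if __name__ == "__main__":
    teacher = {"w": [0.0, 2.0]}
    ema_update(teacher, {"w": [1.0, 2.0]}, decay=0.9)
    print(teacher)
    dets = [Detection((0, 0, 4, 4), "car_light", 0.9),
            Detection((5, 5, 9, 9), "streetlight", 0.3)]
    print(filter_pseudo_labels(dets))
```

A slow EMA keeps the teacher stable. As a result, pseudo-labels do not change abruptly from one step to the next. The score threshold trades pseudo-label recall against noise, which matters when targets are as small as the lights in YDLD.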

Fig 3. Comparison of standard focal loss and proposed LF loss (Source: Paper Figure 4)
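For reference, the standard focal loss that LF loss is compared against is $FL(p_t) = -\alpha_t (1 - p_t)^{\gamma} \log(p_t)$. The sketch below implements only this baseline for a single binary prediction. It does not reproduce the lightness-based modification, because this summary does not give its exact form. The defaults α = 0.25 and γ = 2 are the values commonly used with RetinaNet. The function names are illustrative.

```python
import math

EPS = 1e-12  # guards log(0)


def cross_entropy(p: float, y: int) -> float:
    """Binary cross-entropy for predicted positive probability p and label y in {0, 1}."""
    p_t = p if y == 1 else 1.0 - p
    return -math.log(max(p_t, EPS))


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Standard binary focal loss: easy examples (large p_t) are down-weighted by (1 - p_t)^gamma."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(max(p_t, EPS))


if __name__ == "__main__":
    # An easy positive (p = 0.9) versus a hard positive (p = 0.1).
    for p in (0.9, 0.1):
        print(p, round(cross_entropy(p, 1), 4), round(focal_loss(p, 1), 4))
```

With γ = 0 and α = 0.5, the focal loss reduces to half the cross-entropy. Larger γ shifts more of the total loss onto hard examples.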

In extensive experiments against state-of-the-art detectors (Faster R-CNN, YOLOX, DINO, etc.), the proposed SS-FLD achieved the best mAP of 26.0 on the YDLD benchmark. It especially outperformed the other detectors on very tiny and tiny objects (AP_vt, AP_t), which are the size ranges most relevant to light detection.
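This summary does not define the size ranges behind AP_vt and AP_t. The following is a minimal sketch that assumes an AI-TOD-style convention. In that convention, object size is the square root of box area: very tiny below 8 px, tiny 8 to 16 px, small 16 to 32 px, medium 32 to 64 px. The thresholds and the `size_bucket` name are assumptions, not values confirmed by the paper.

```python
import math


def size_bucket(width: float, height: float) -> str:
    """Assign a box to a size range by sqrt(area), using assumed AI-TOD-style thresholds."""
    side = math.sqrt(width * height)
    if side < 8:
        return "very_tiny"
    if side < 16:
        return "tiny"
    if side < 32:
        return "small"
    if side < 64:
        return "medium"
    return "large"


if __name__ == "__main__":
    # A distant streetlight versus a nearby car light (illustrative box sizes).
    print(size_bucket(5, 6), size_bucket(40, 30))
```

Under these thresholds, a light only a few pixels across lands in the very tiny range. Standard COCO-style metrics would lump such lights together with much larger "small" objects.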